Run generative AI models on serverless GPUs using your cloud credits

If you’re a seed-stage startup with less than $500K in funding, you may be eligible to use Tensorfuse for free for 6 months. The offer works alongside your existing cloud credits, so you can run GPU applications for free.

Trusted infra partner for high-growth startups

We were having a lot of annoying issues running our model on a SageMaker endpoint (latency increasing over time, timeouts, instances getting overloaded, etc.), and we saw that Tensorfuse could potentially be a huge help for us. We reached out and they were very helpful. The Tensorfuse team met with me and walked me through setting up their product.

Jake Yatvitskiy

Co-founder, Haystack (YC S24)

Tensorfuse has been a tremendous asset for ForEffect. Being able to rapidly deploy models within our own environment has greatly accelerated development while cutting costs. Running serverless GPUs on our cloud is now incredibly smooth and efficient. Honestly, I don't see why anyone would go with Runpods or other hosted GPU providers over Tensorfuse.

Albert Jo

Founder, ForEffect (YC W24)

The Tensorfuse team and product have been great for us. They were super helpful in helping us migrate, and it's a pretty easy process. It helped us remove a ton of our own patched-together DevOps and free up an engineer.

Omnisync AI (YC W19)

The team has gone above and beyond onboarding us and supporting us, at all hours of the day. Super easy to set up, would recommend.

Jaochim Faiberg

Co-founder and CTO, The Forecasting Company (YC S24)

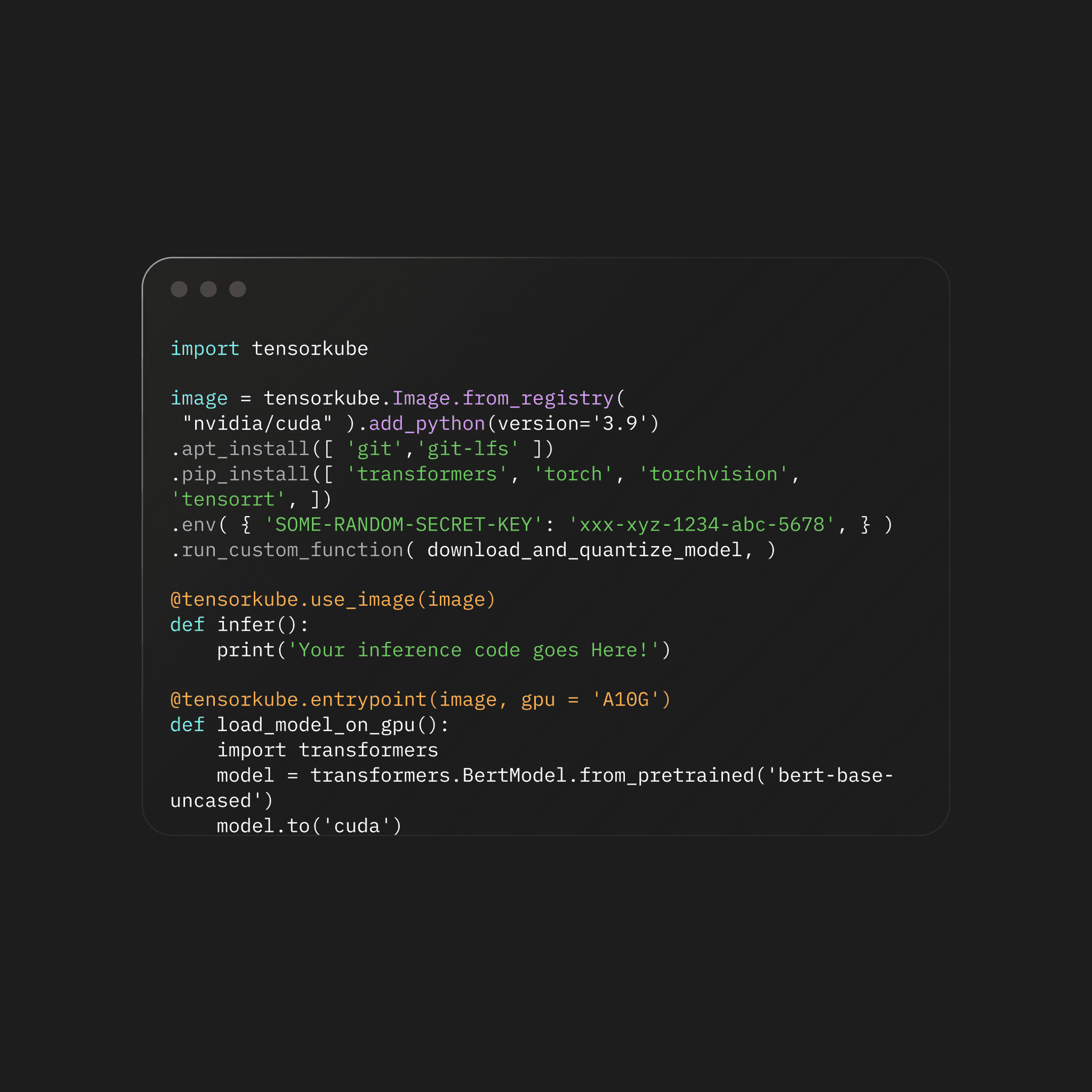

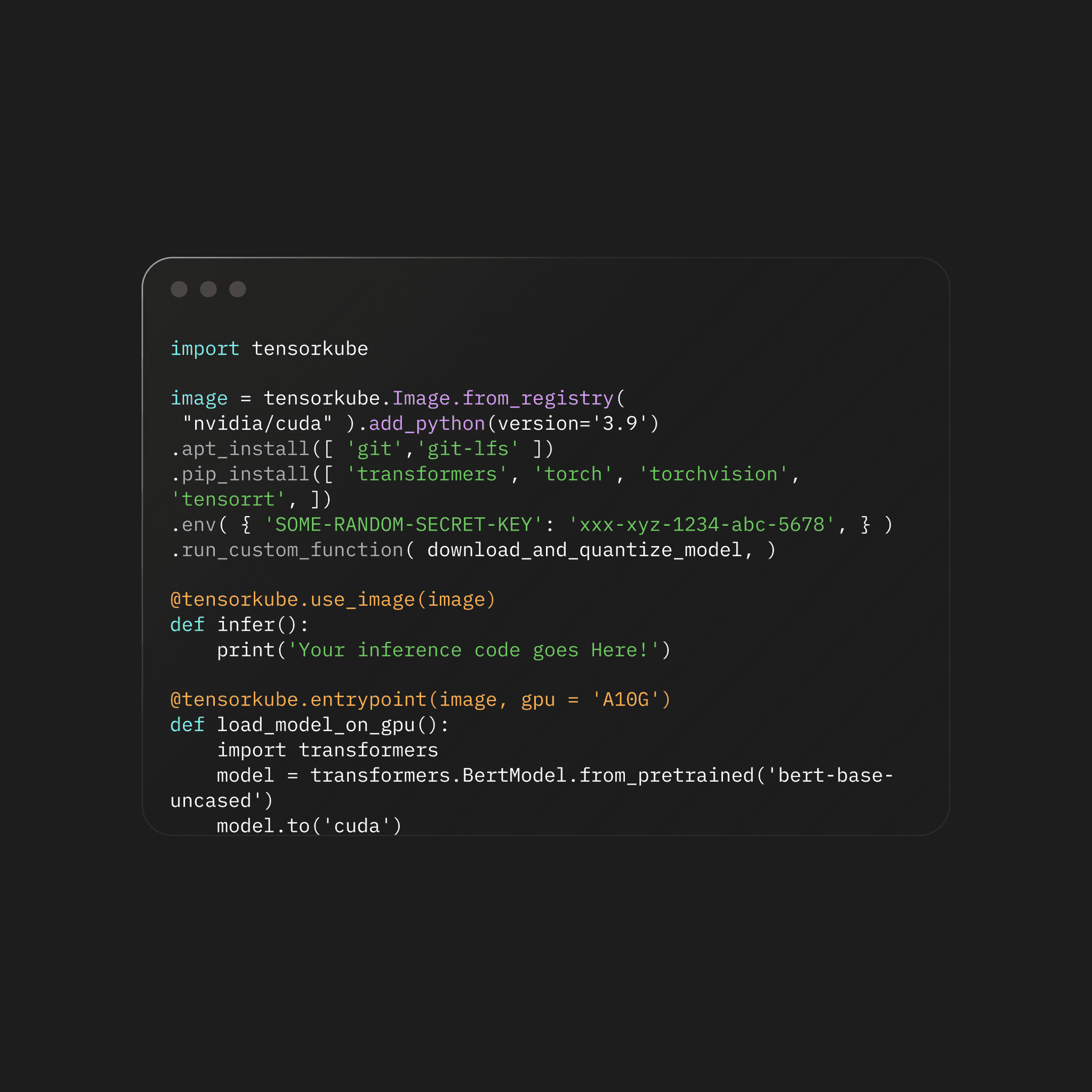

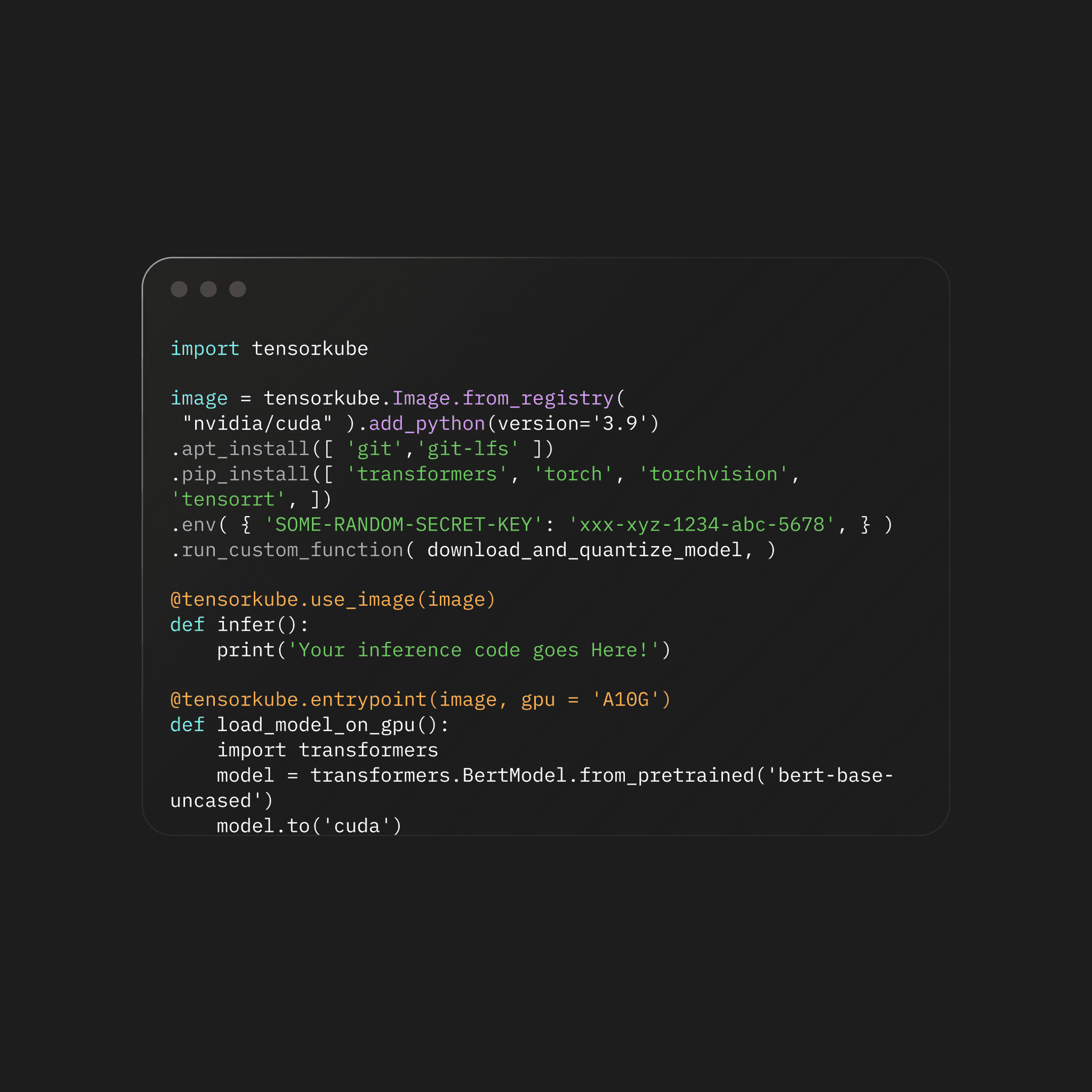

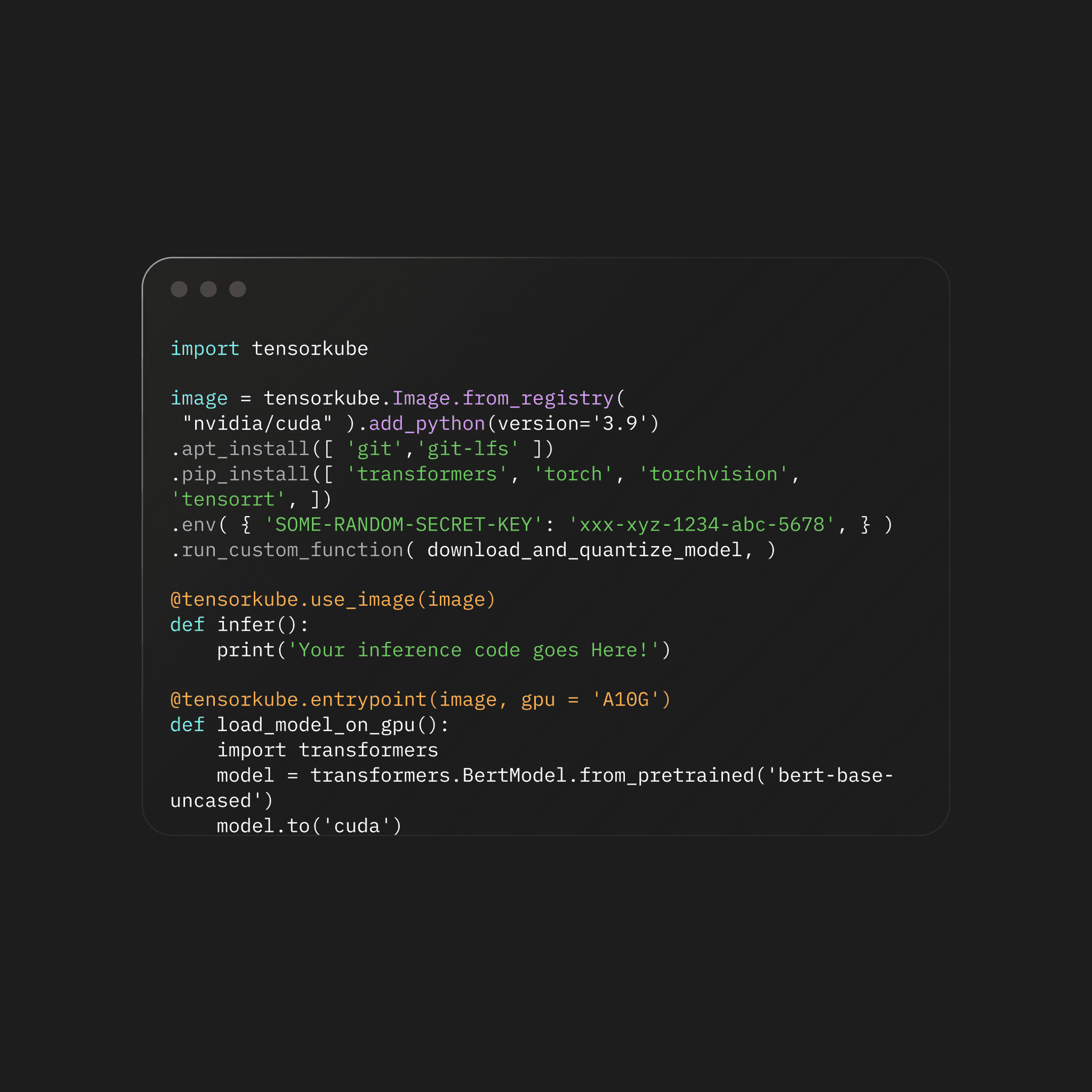

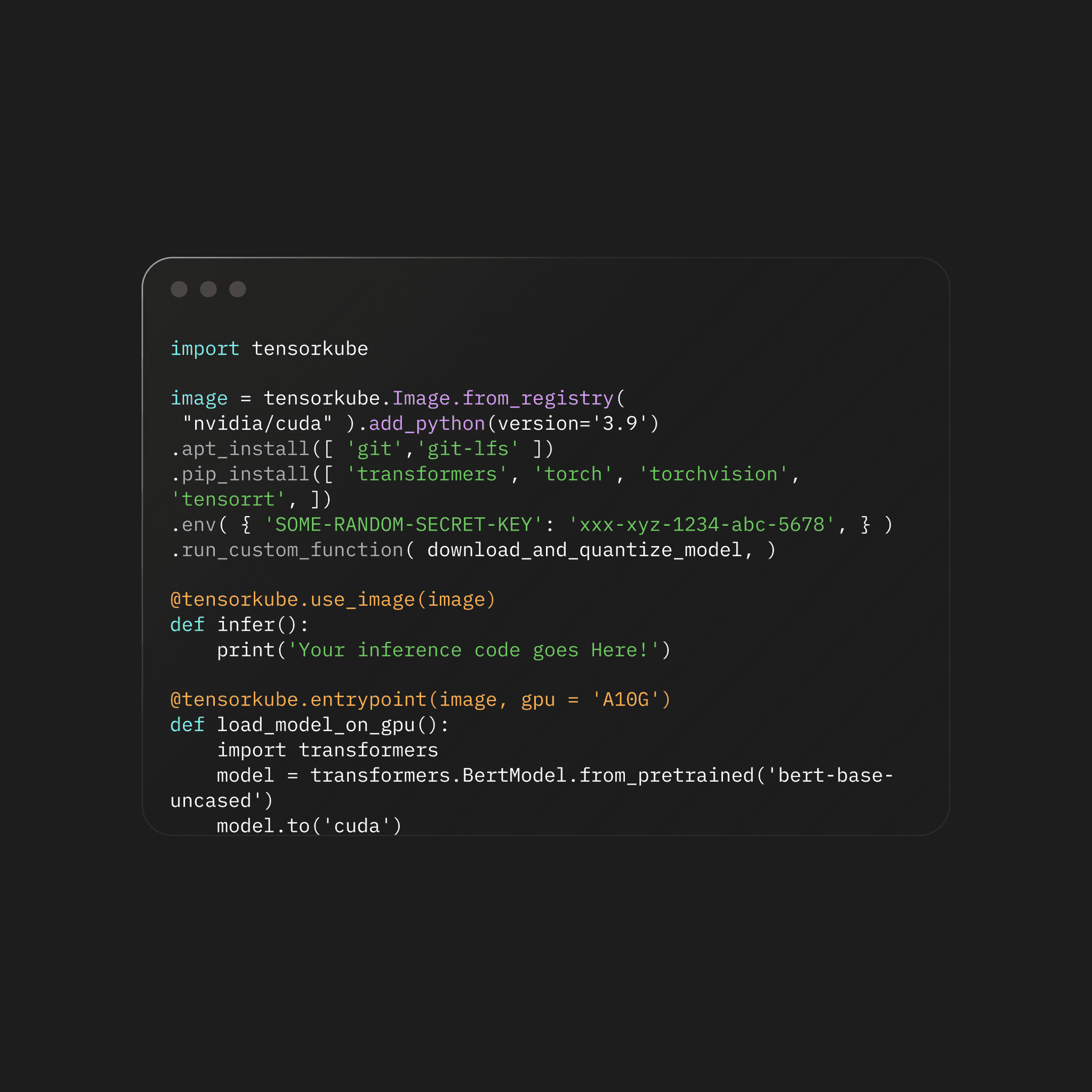

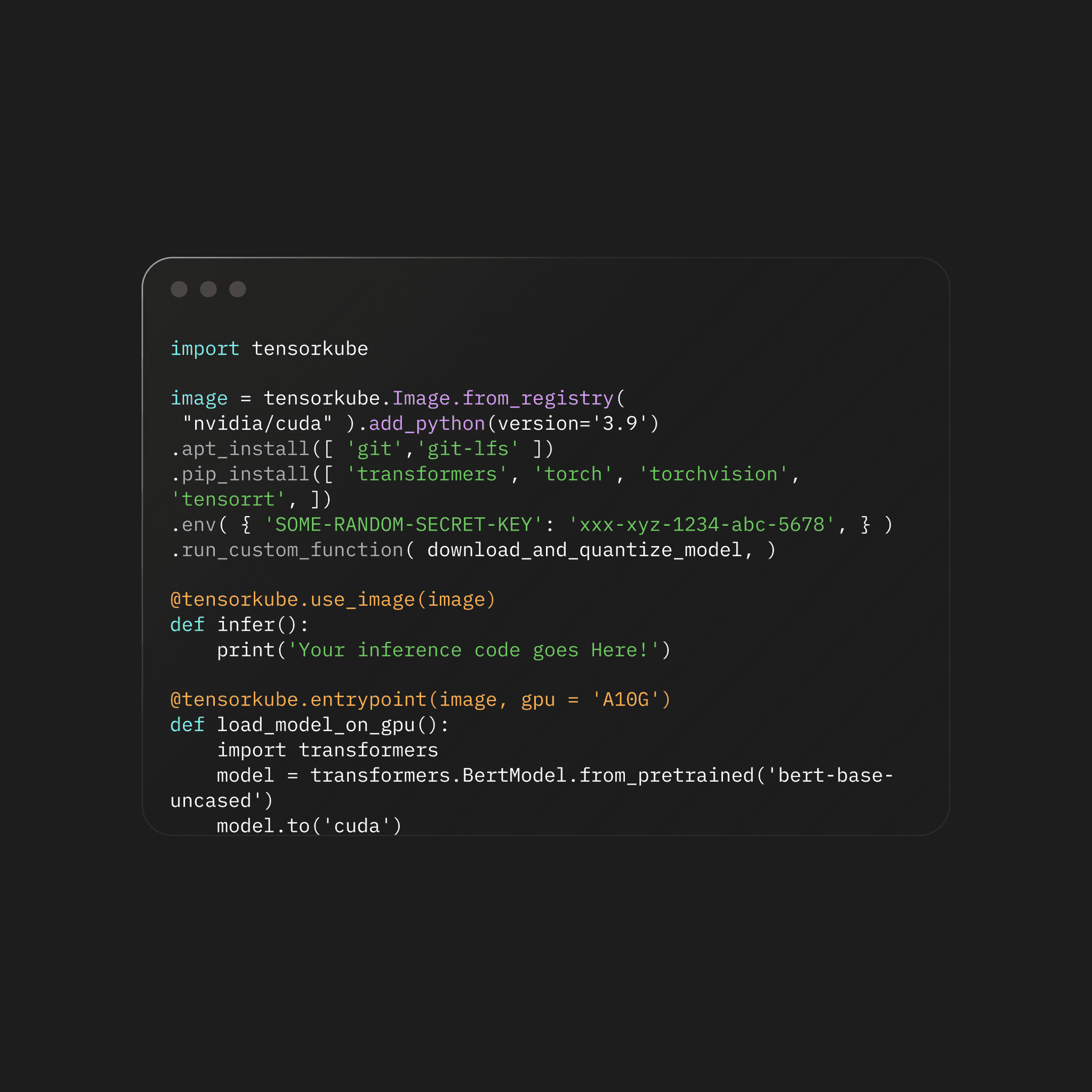

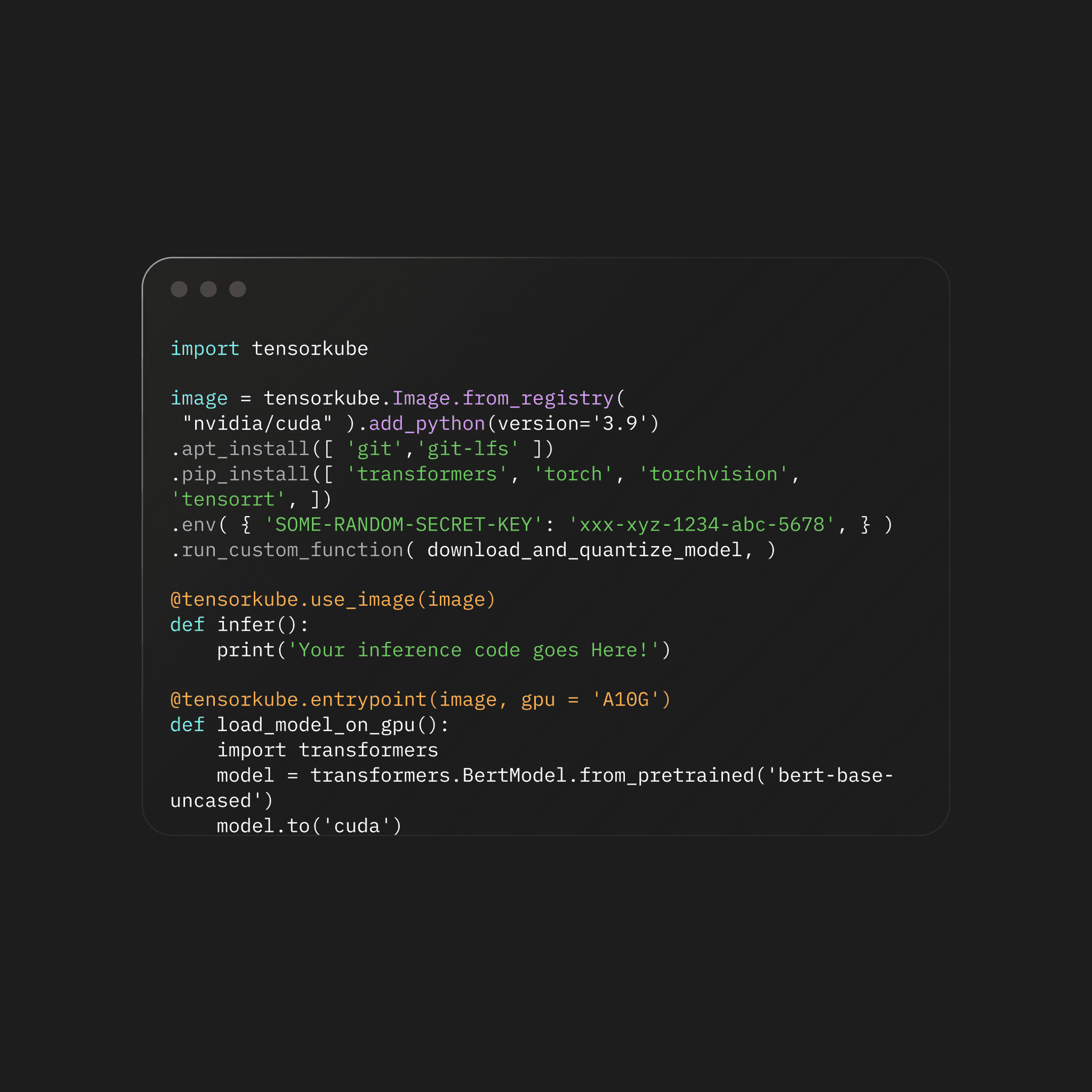

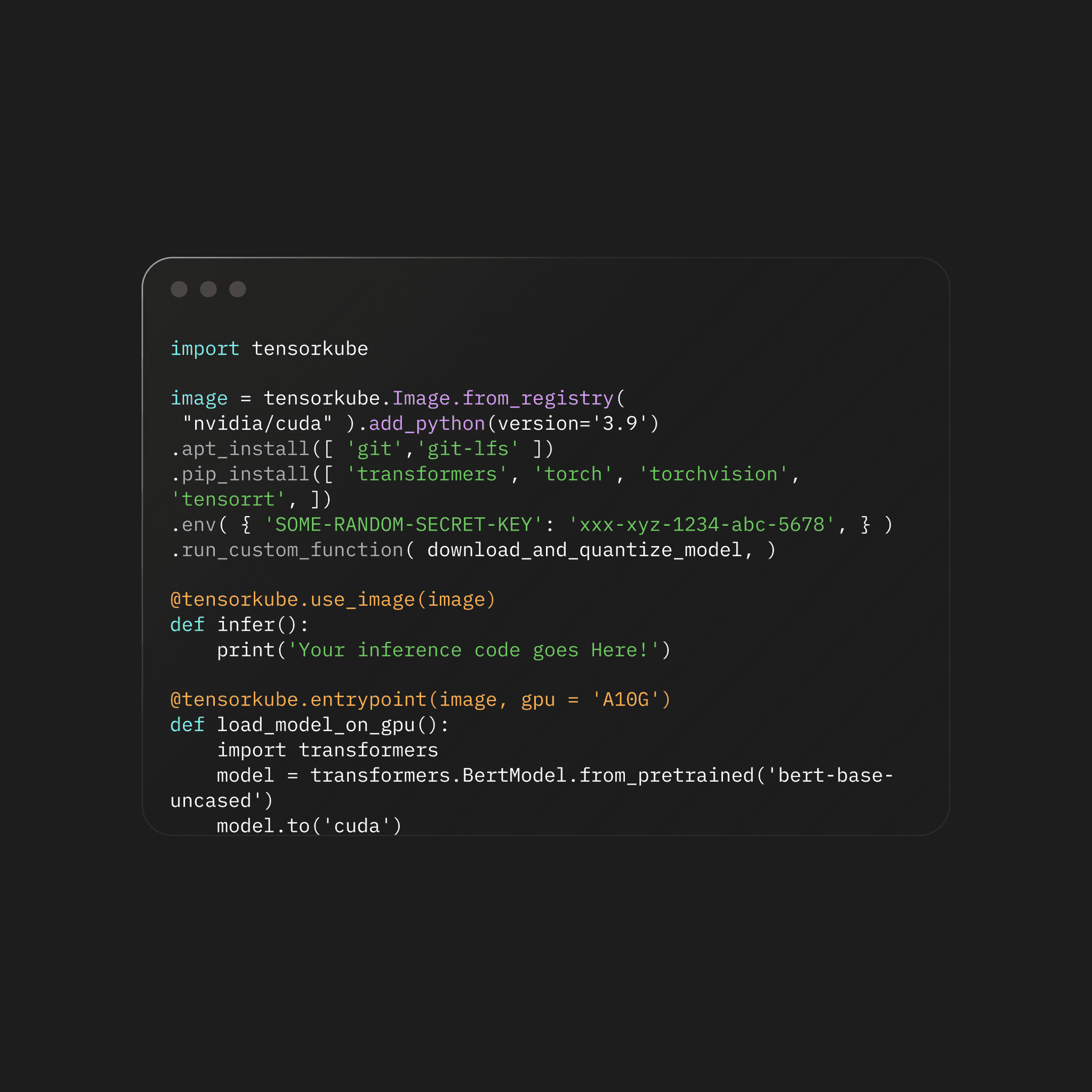

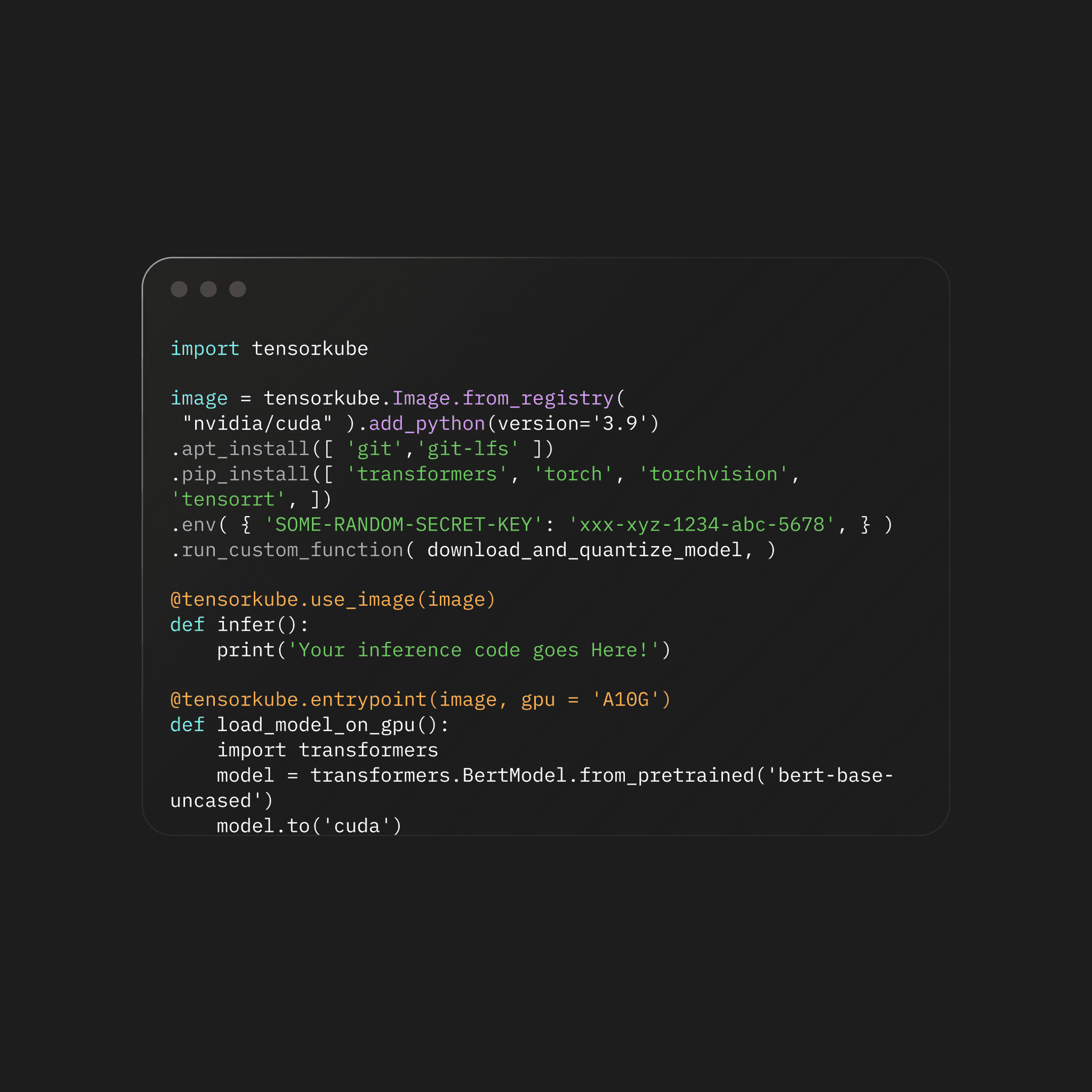

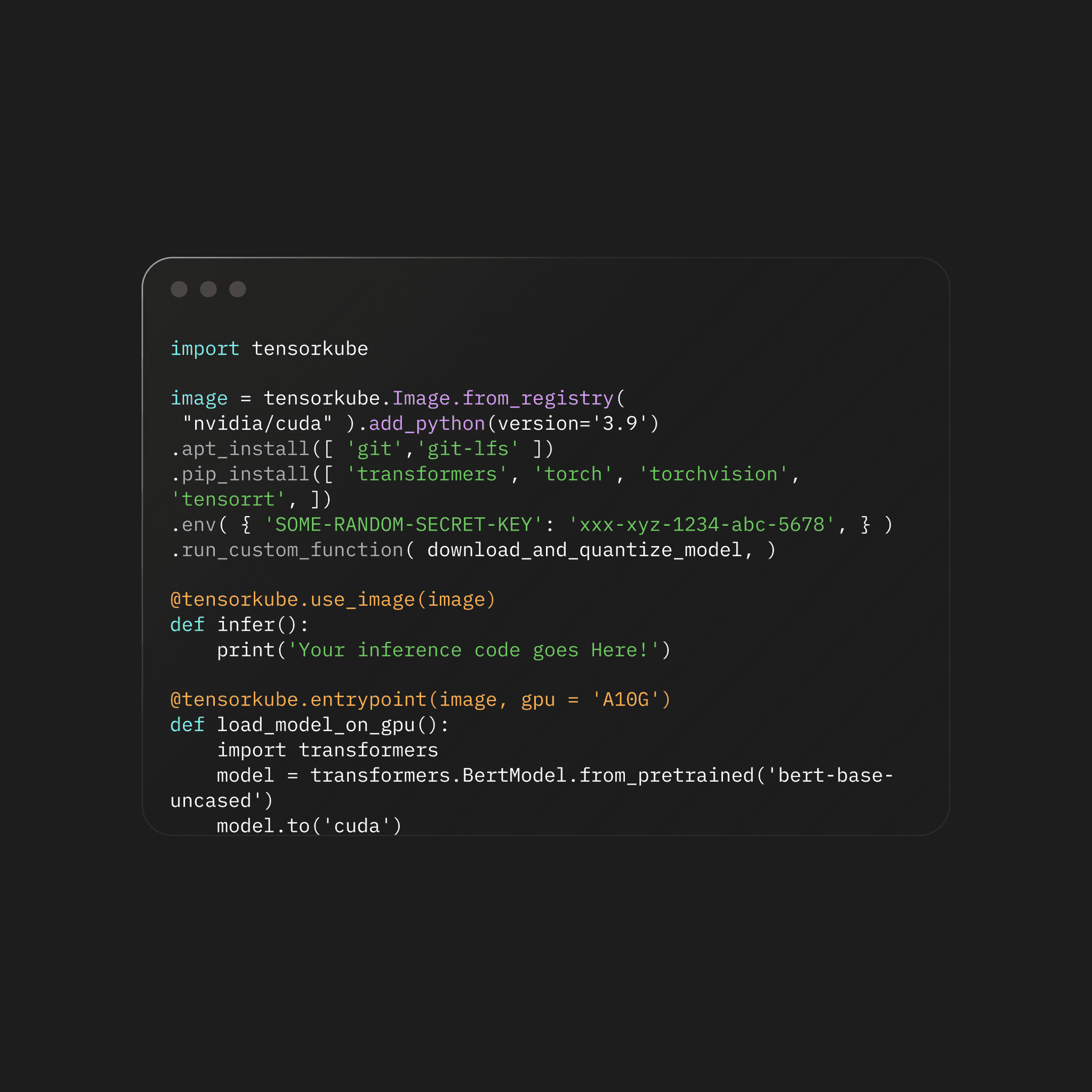

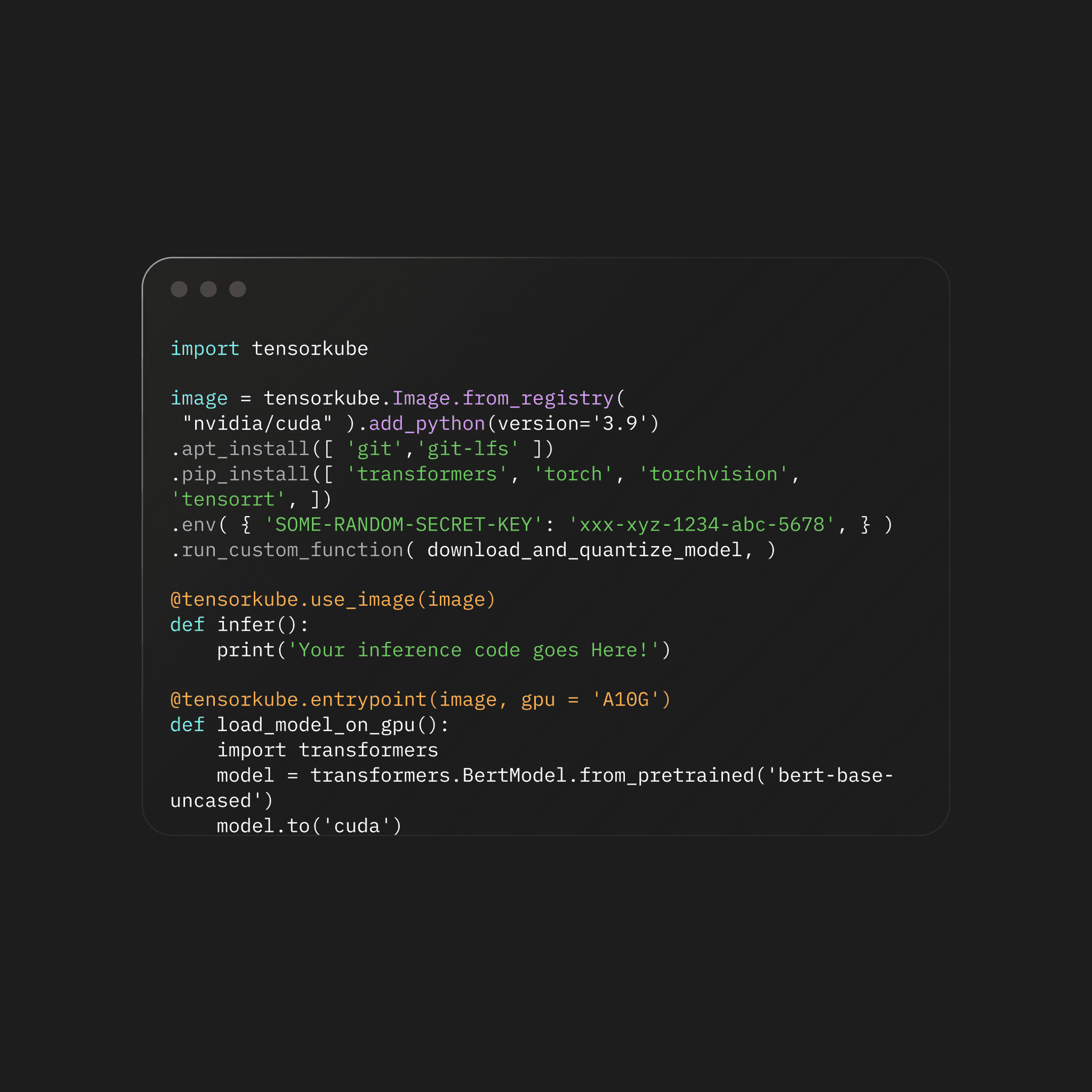

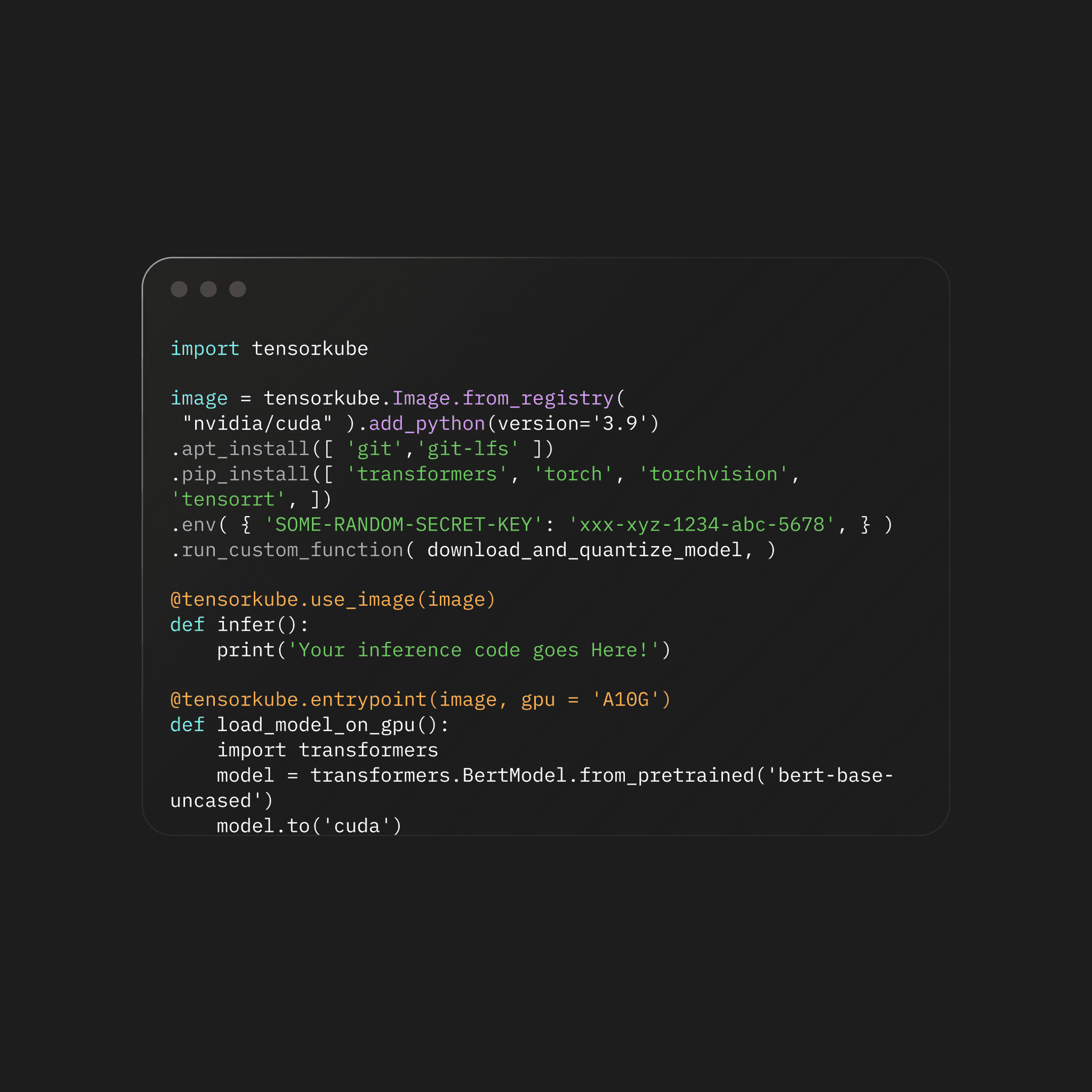

Connect your cloud account

Configure the Tensorfuse runtime with a single command to get started with deployments

Deploy and autoscale

Deploy ML models to your own cloud and start using them via an OpenAI-compatible API
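As a rough illustration of what "OpenAI-compatible" means in practice, the sketch below builds a request in the OpenAI chat-completions wire format using only the Python standard library. The base URL, model name, and the `/v1/chat/completions` path are assumptions for illustration, not confirmed details of any particular deployment; substitute whatever your own endpoint exposes.

```python
import json
import urllib.request

# Hypothetical values: replace with the URL and model name of your own deployment.
BASE_URL = "https://your-deployment.example.com"
MODEL = "my-model"


def build_chat_request(base_url, model, prompt, api_key=None):
    """Build a POST request in the OpenAI chat-completions wire format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Bearer auth is how OpenAI-style APIs usually take keys (assumed here).
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def send_chat_request(request, timeout=30):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request(BASE_URL, MODEL, "Say hello in one sentence.")
    print(req.full_url)
    # Uncomment once BASE_URL points at a live deployment:
    # print(send_chat_request(req))
```

Because the request shape matches the OpenAI format, existing OpenAI client code can typically be pointed at such an endpoint by changing only its base URL.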

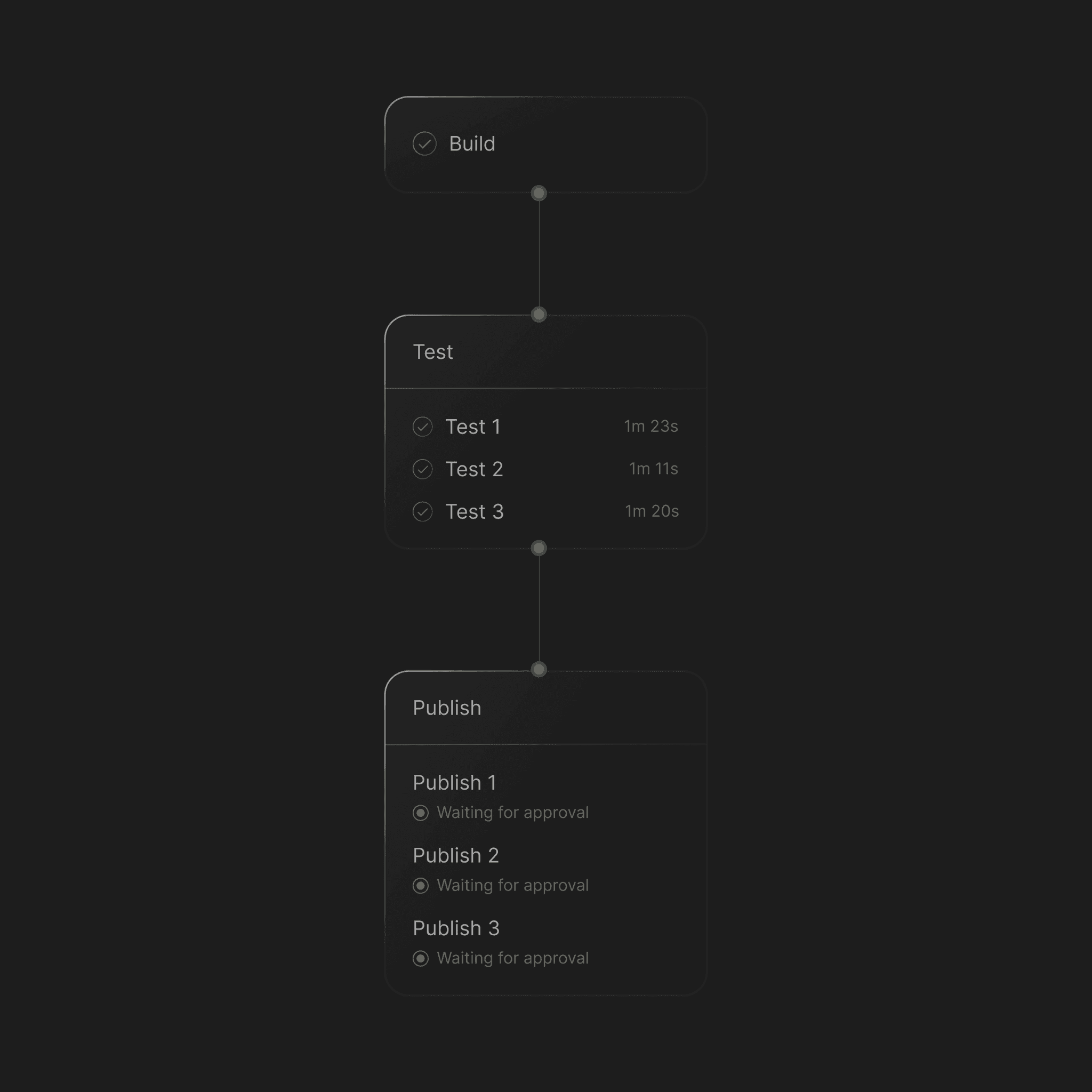

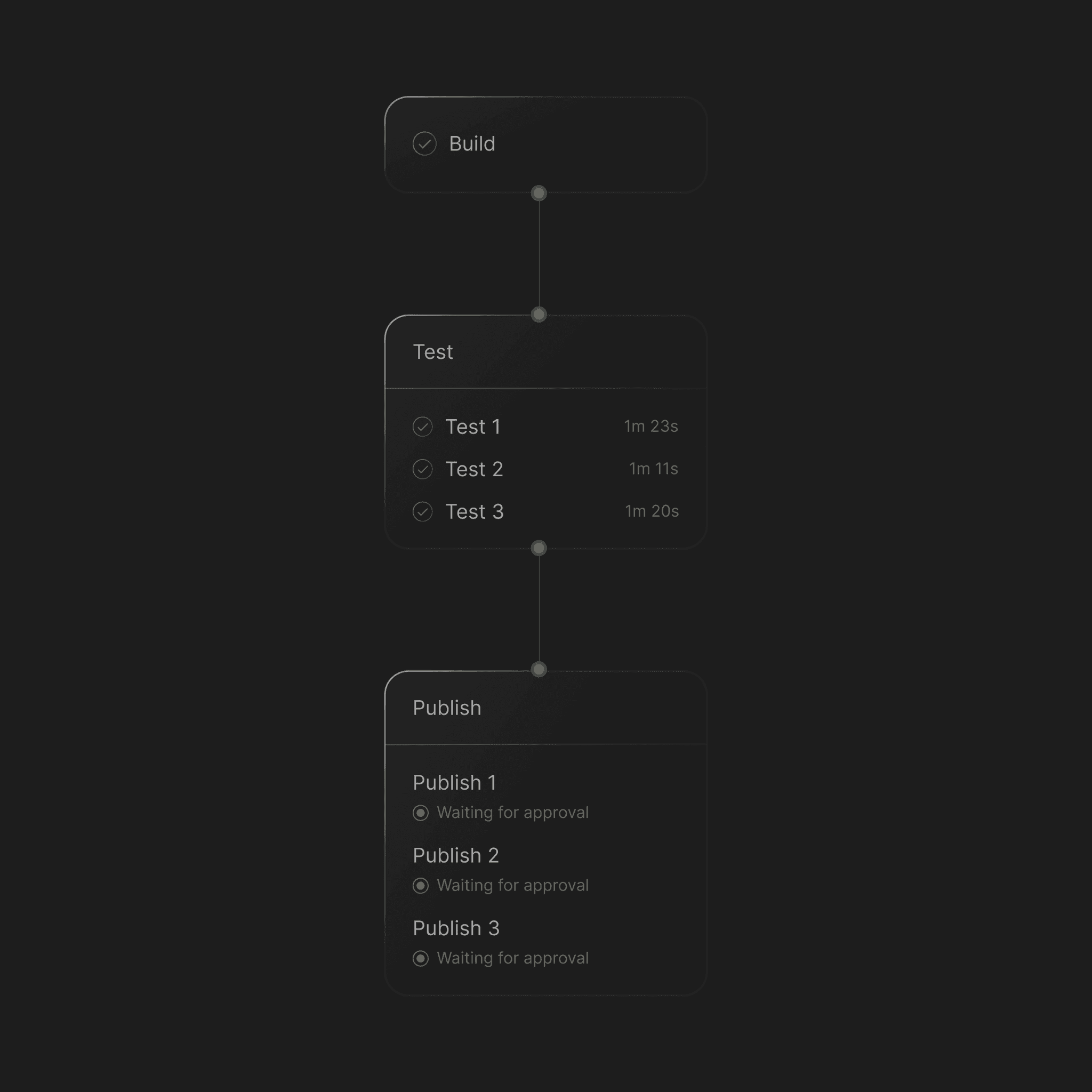

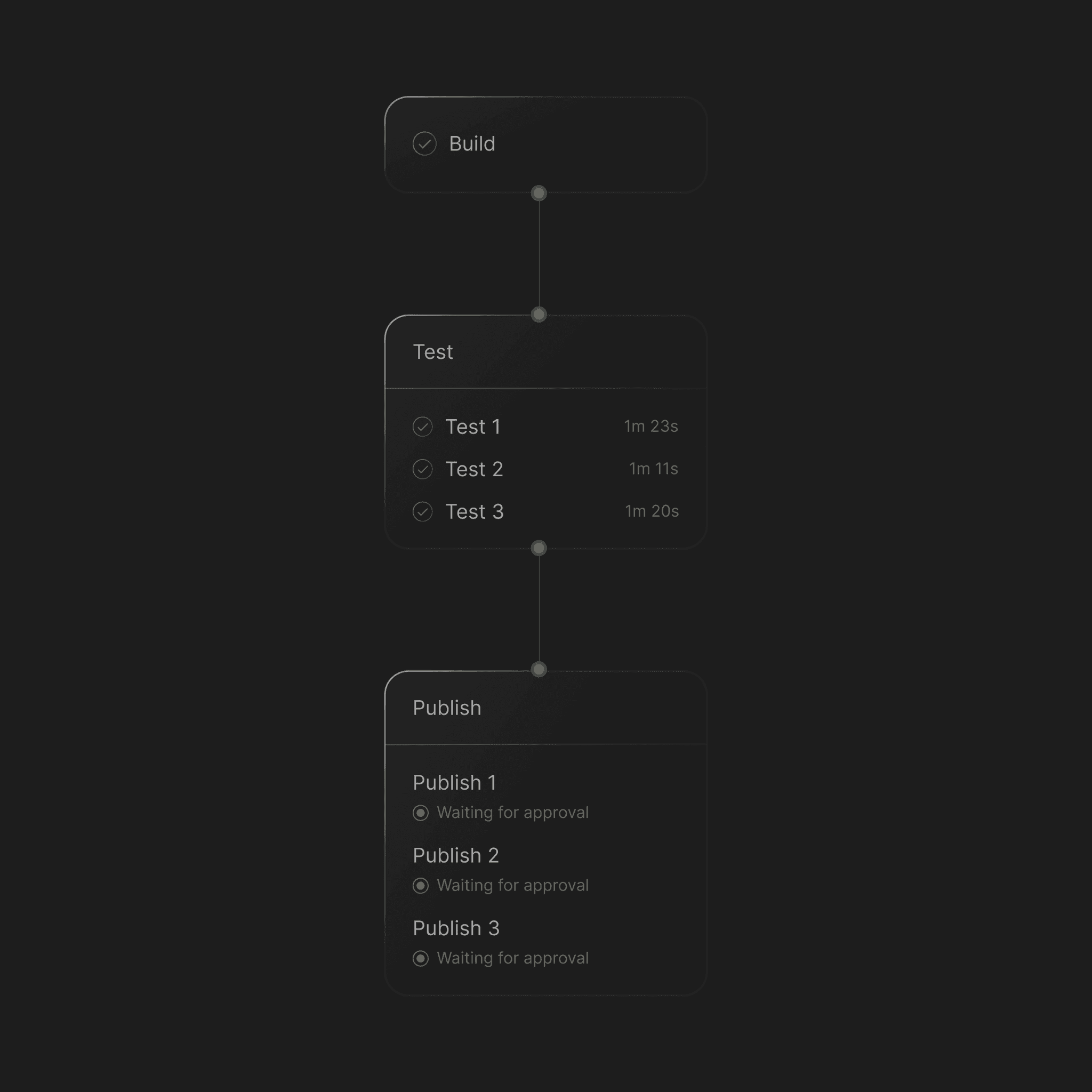

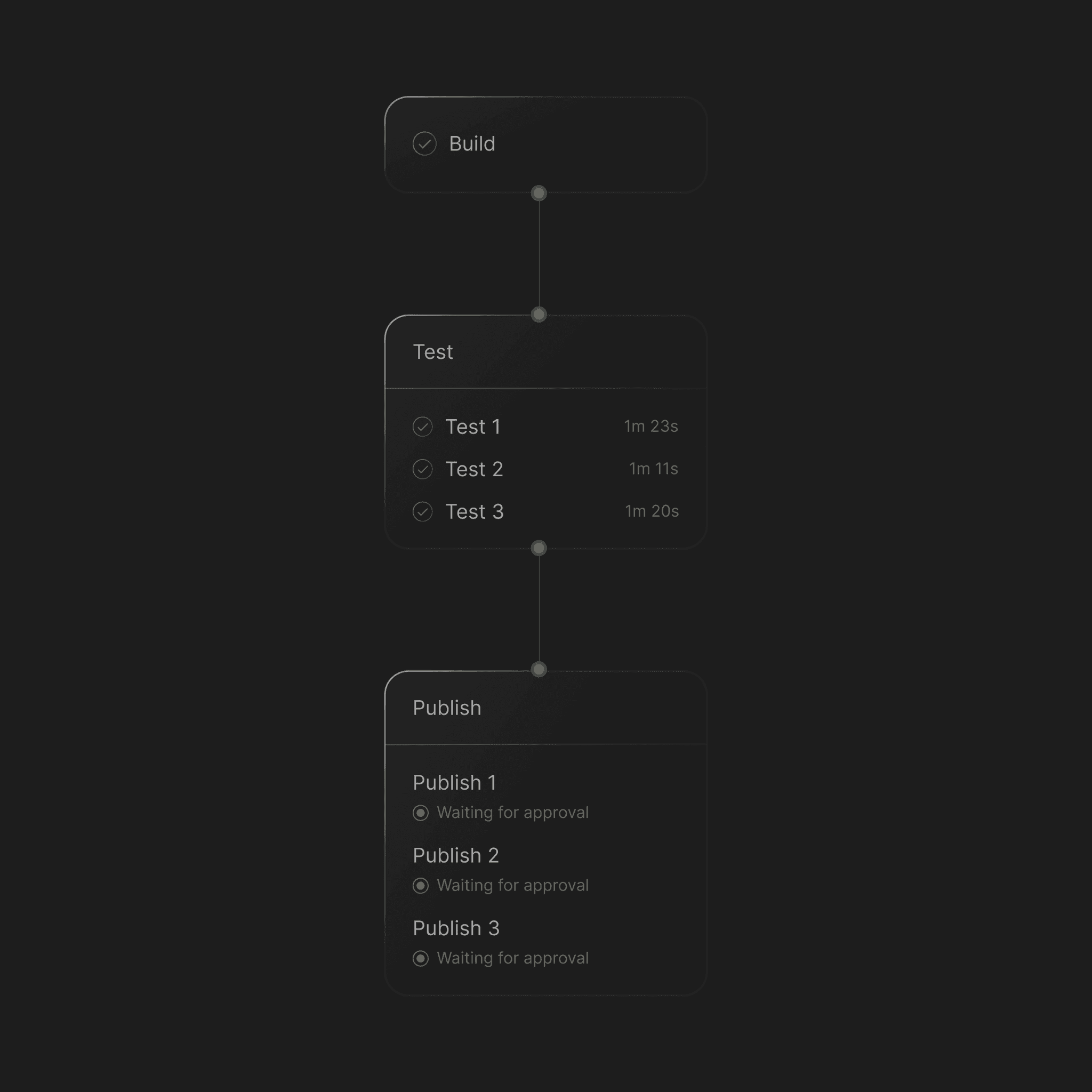

GitHub Actions

Automate builds using GitHub Actions

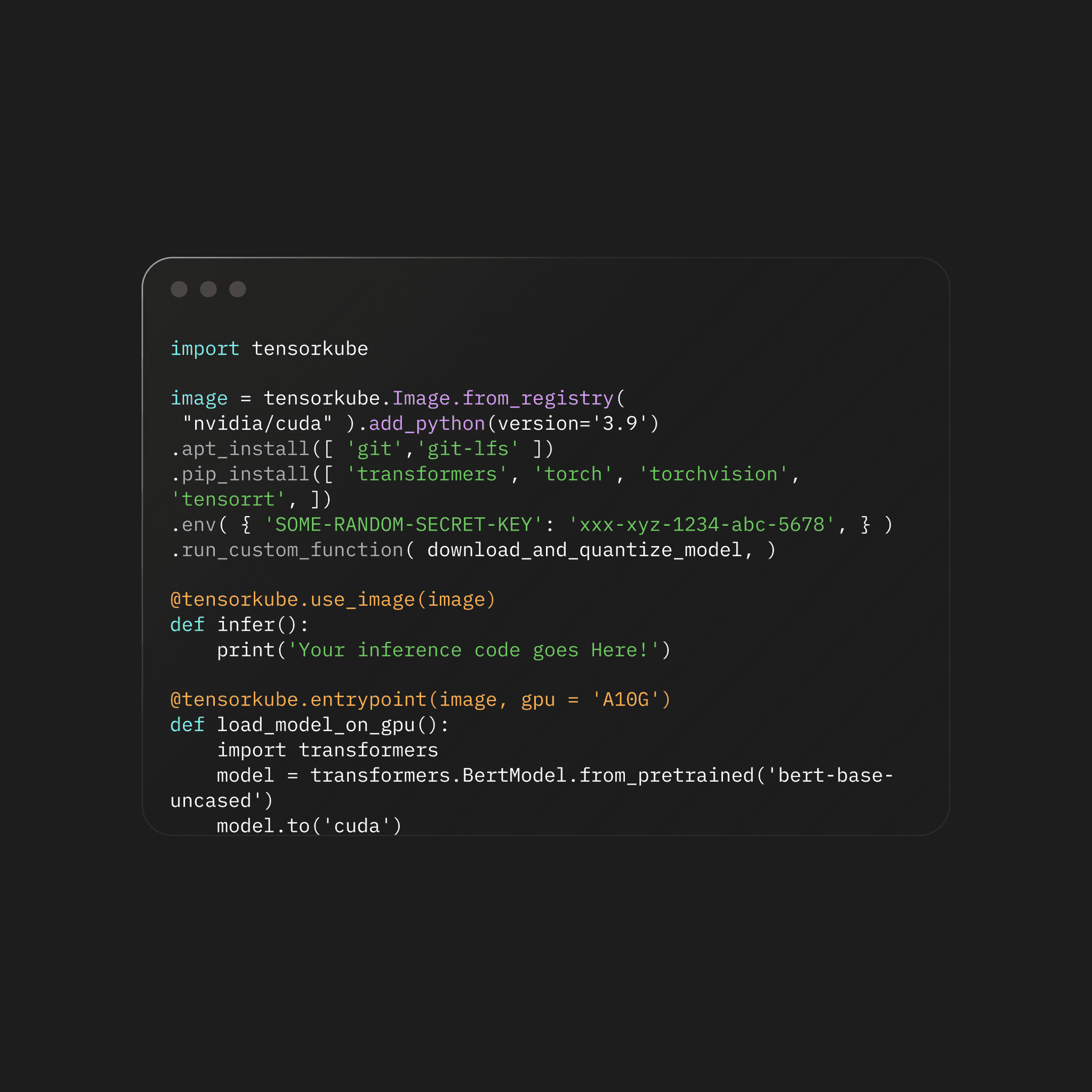

Deploy in minutes, scale in seconds

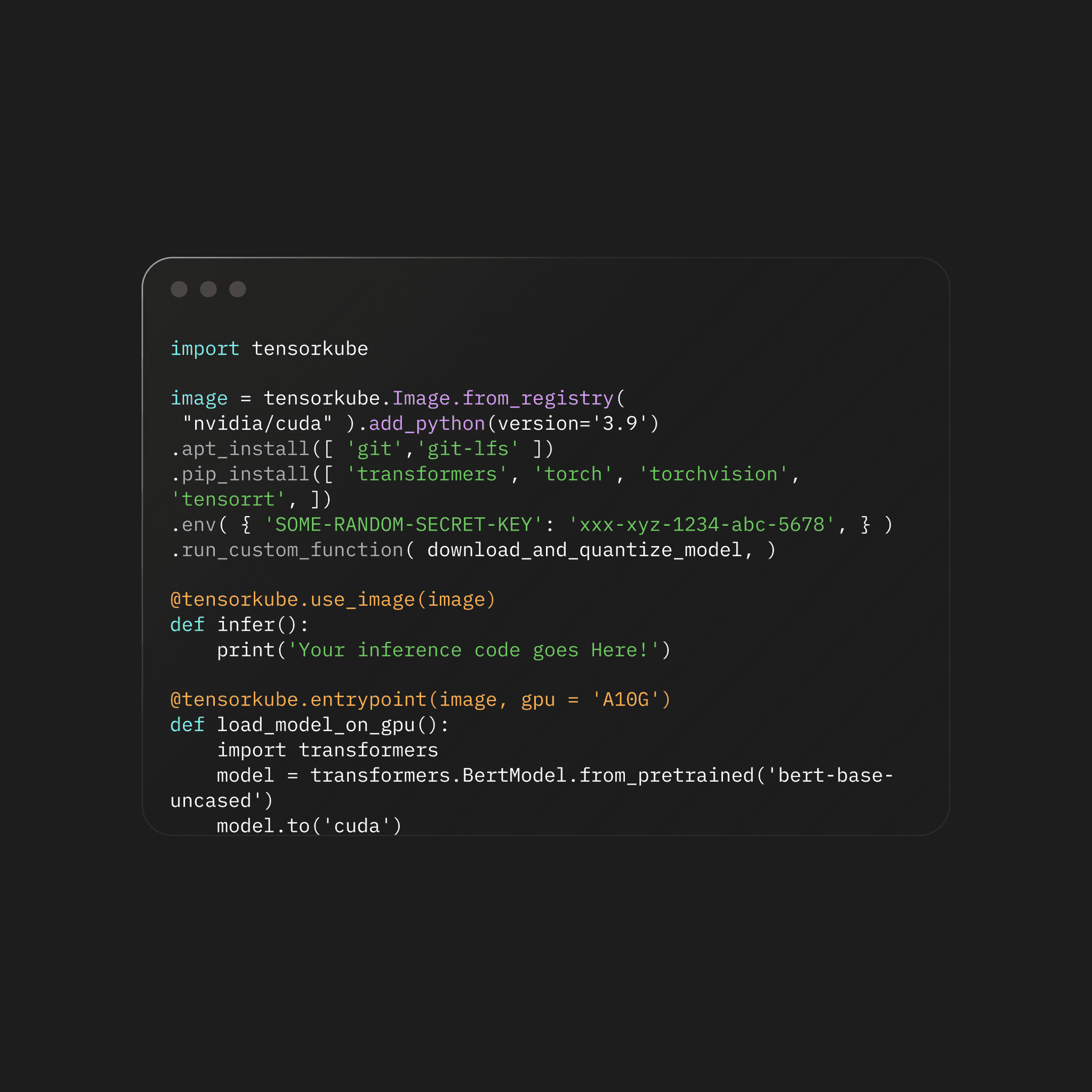

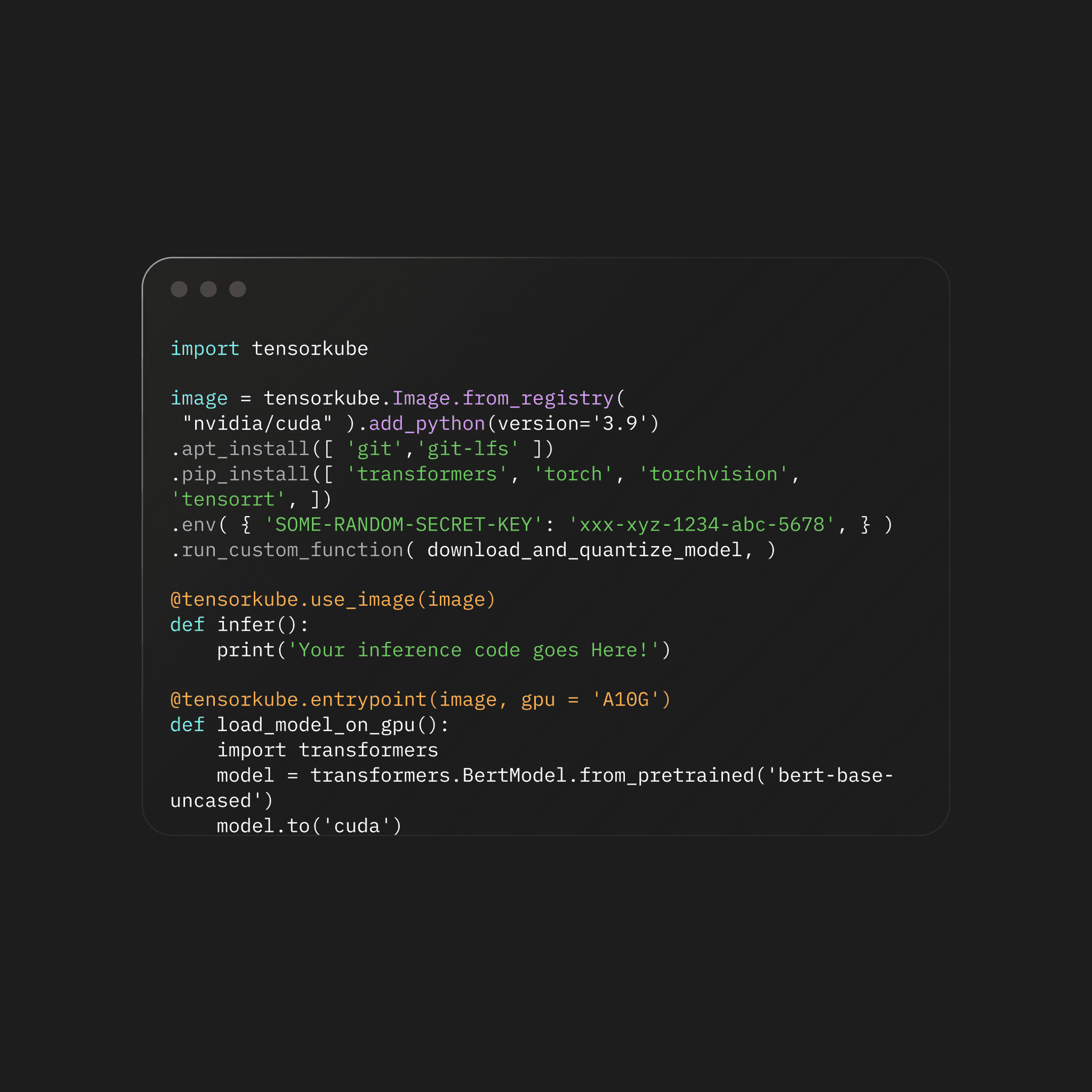

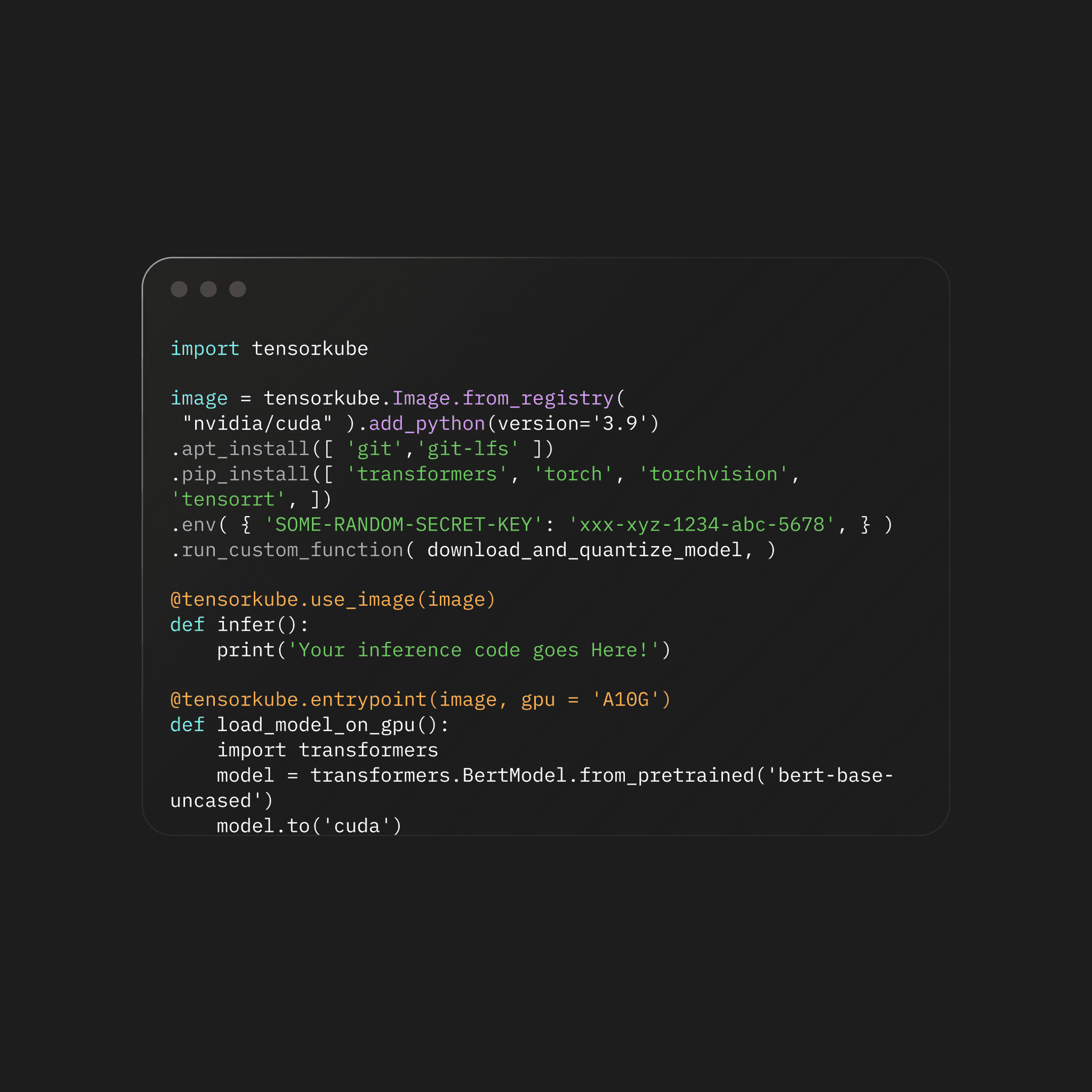

Get started with Tensorfuse today.

Accelerate your AI app's time to market without any DevOps overhead

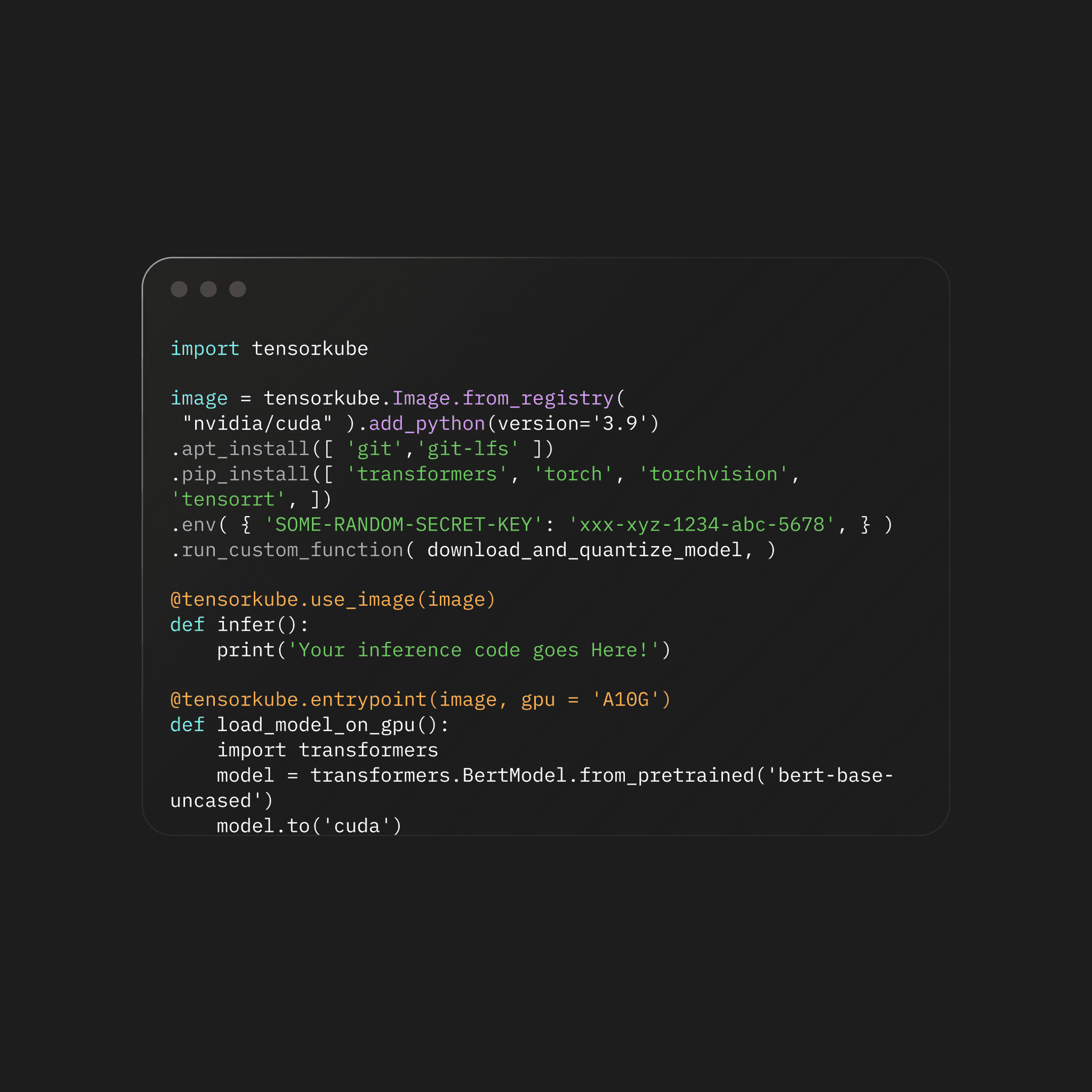

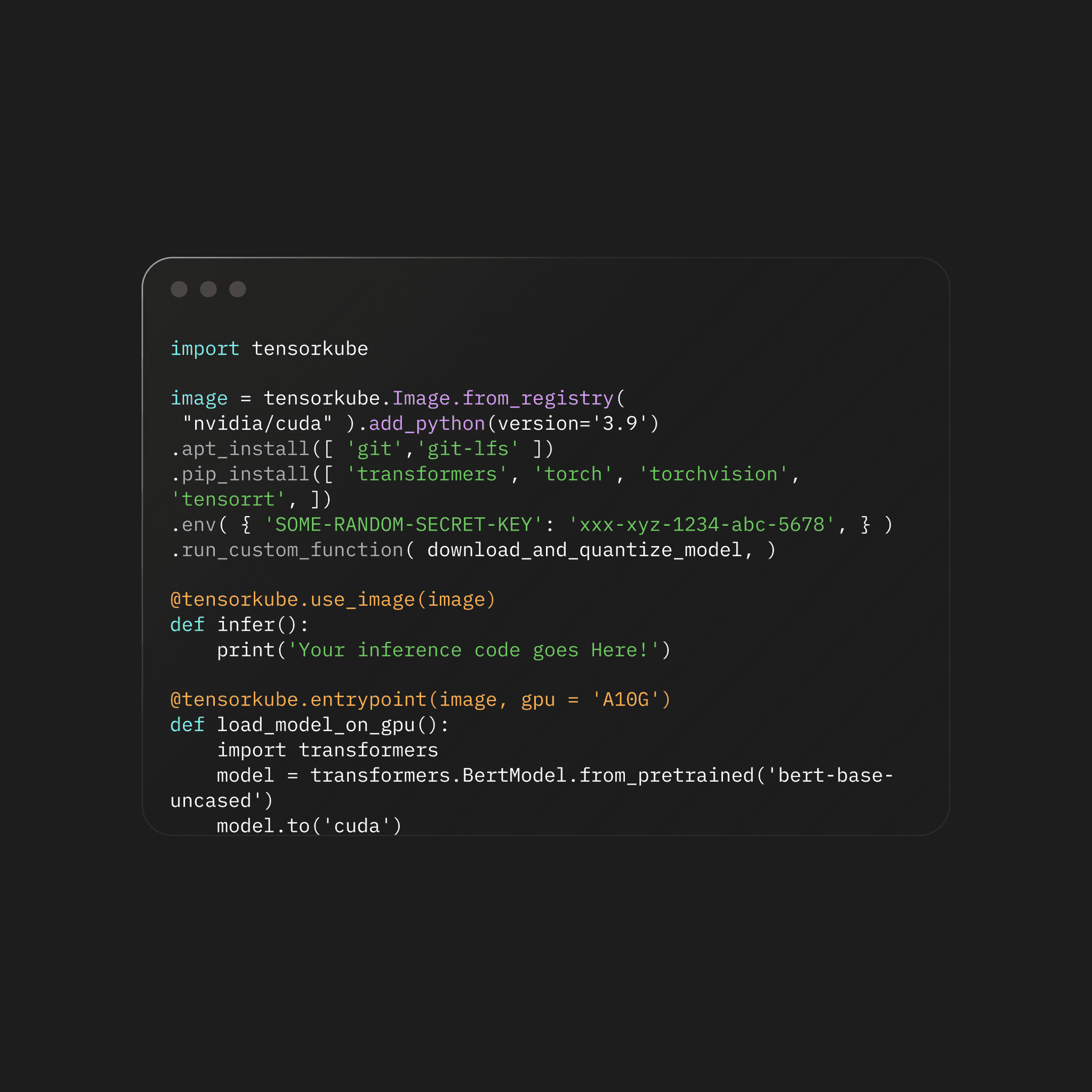
